Mad Libs - Google Action

Working with: Matchbox Mobile, PRH, & Google

Key contributions: Initial Research, assessing the content, creating a strategy, mapping out the product, pitching concepts, Google Canvas visuals (using PRH assets), creating Lottie animations, SSML, snagging and refinement

Summary

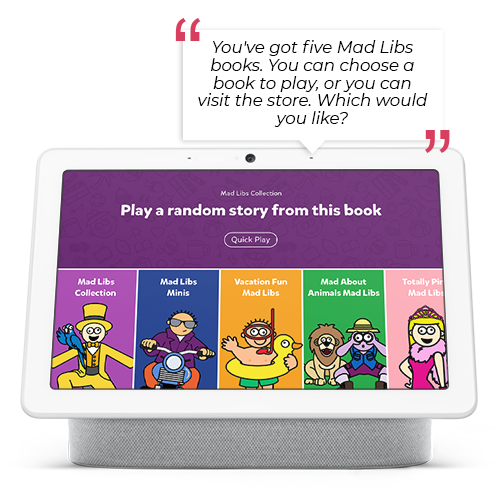

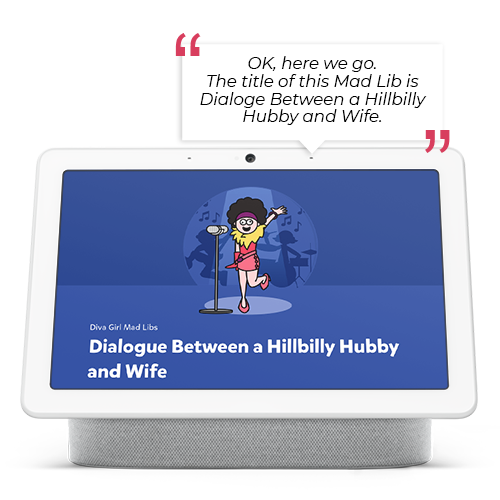

Mad Libs is a popular word game in which users are prompted for words to fill the gaps in a story, which is then read aloud. After it had been turned into a successful voice experience on Alexa, Google asked PRH and Matchbox to expand the experience to showcase its new in-app purchase and Canvas visual features.

Research

I started this project with a kick-off meeting involving both Google & Penguin Random House to discuss goals, assumptions, and limitations. I then familiarised myself with the existing brand assets and gameplay before reviewing the documentation for Google Canvas & in-app purchases.

Findings

My research produced several key findings, which I translated into the following user stories:

To purchase a book the user will need to link the Action to their account > “As a user, I need to link my account before I make a purchase.”

PRH will be making 12 different books available in the Google Action > “As a user, I need to be able to pick a book to play from the options.”

Two of the books will be free; the other 10 will need to be purchased > “As a user, I want to know which books are free and which aren't.”

Not everyone knows what each word type is > “As a user, I need an explanation of what the word type is.”

People occasionally get stuck on a word > “As a user, I need a helping hand in coming up with a word.”

Although users like choice, sometimes they want to dive straight in and play > “As a user, I want to play a random book.”

Ideation

Once I had a thorough understanding of the product goals and the problems I needed to solve, I started sketching out ideas in the form of user scripts and wireframes.

A key area I focused on was the book picker: how to present the options to the user without the barrier of reading out a long list. On a Smart Display the user could scroll through the options on screen, but Voice Assistant users needed a way to pick a book without touch interaction.

Once I was happy with the scripts, they were sent to Google & PRH for final review. I then moved on to the visuals, which I created by reworking existing brand assets to suit a multi-modal interface.